High-Performance Computing Center Stuttgart

Through an agreement just signed with HPE, HLRS will now expand this world-class CPU-based computing system by adding 24 HPE Apollo 6500 Gen10 Plus systems with 192 NVIDIA A100 GPUs based on the NVIDIA Ampere architecture. The addition of 120 petaflops of AI performance will not only dramatically expand HLRS's ability to support applications of deep learning, high-performance data analytics, and artificial intelligence (AI), but also enable new kinds of hybrid computing workflows that combine traditional simulation methods with Big Data approaches.

The expansion also offers a new AI platform with three times the number of NVIDIA processors found in HLRS's Cray CS-Storm system, its current go-to system for AI applications. It will enable larger-scale deep learning projects and expand the amount of computing power for AI that is available to HLRS's user community.

"At HLRS our mission has always been to provide systems that address the most important needs of our key user community, which is largely focused on computational engineering," explained HLRS Director Dr.-Ing. Michael Resch. "For many years this has meant basing our flagship systems on CPUs to support codes used in computationally intensive simulation. Recently, however, we have seen growing interest in deep learning and artificial intelligence, which run much more efficiently on GPUs. Adding this second key type of processor to Hawk's architecture will improve our ability to support scientists in academia and industry who are working at the forefront of computational research."

In the past, HLRS's flagship supercomputers — Hawk, and previously Hazel Hen — have been based exclusively on central processing units. This is because CPUs offer the best architecture for many codes used in fields such as computational fluid dynamics, molecular dynamics, climate modeling, and other research areas in which HLRS's users are most active. Because the outcomes of simulations are often applied in the real world (for example, in replacing automobile crash tests), simulation algorithms must be as accurate as possible, and are thus based on fundamental scientific principles. At the same time, however, such methods require large numbers of computing cores and long computing times, generate enormous amounts of data, and require specialized programming expertise to run efficiently.

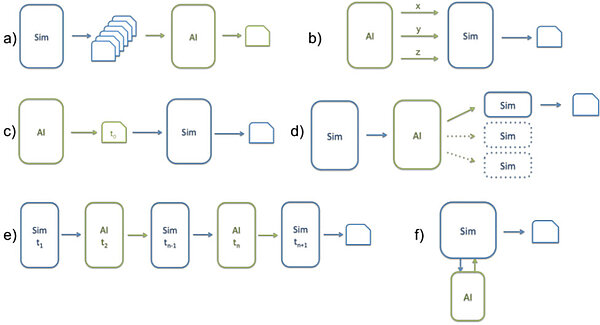

In recent years, researchers have begun exploring how deep learning, high-performance data analytics, and artificial intelligence could accelerate and simplify such research. As opposed to simulation, in which a complex system is modelled in a "top-down" way based on scientific principles, these newer methods use a "bottom-up" approach. Here, deep learning algorithms identify patterns in large amounts of data, and then create a computational model that approximates the behavior of the actual data. The "trained" model can then be used to predict how other systems that are similar to those in the original dataset will behave. Although such models are not guaranteed to be as precise as simulations based on first principles, they often provide approximations that are close enough to be useful in practice, complementing or sometimes even replacing more computationally intensive approaches.

For technical reasons, such deep learning approaches do not run efficiently on CPU processors, but benefit from a different type of accelerator called a graphic processing unit. Originally designed to rapidly re-render screens in action-packed video games, GPUs are also capable of analyzing the large matrices of data required for algorithms using neural networks. Whereas CPUs are best suited for diverse calculations, GPUs are better at flying through repetitive tasks in parallel. In deep learning and AI this can mean simultaneously comparing hundreds of thousands of parameters in millions of large datasets, identifying meaningful differences in them, and producing a model that describes them.

Although some have speculated that AI could eventually replace high-performance computing altogether, the truth is that some of the most interesting research methods right now lie in combining these bottom-up and top-down approaches.

Deep learning algorithms typically require enormous amounts of training data in order produce statistically robust models — a task that is perfectly suited to high-performance computers. At the same time, models created using deep learning on GPU-based systems can inform and accelerate researchers' use of simulation. Learning algorithms can, for example, reveal key parameters in data, generate hypotheses that can guide simulation in a more directed way, or deliver surrogate models that substitute for compute-intensive functions. New research methods have begun integrating these approaches, often in a circular, iterative manner.

In 2019 HLRS made a significant leap into the world of deep learning and artificial intelligence by installing an NVIDIA GPU-based Cray CS-Storm system. The addition has been well received by HLRS's user community, and is currently being used at near capacity.

Although the Cray CS-Storm system is well suited for both standard machine learning and deep learning projects, the experience has nevertheless revealed limitations in using two separate systems for research involving hybrid workflows. Hawk and the Cray CS-Storm system use separate file systems, meaning data must be moved from one system to the other when training an AI algorithm or using the results of an AI model to accelerate a simulation. These transfers require time and workflow interruptions and that users master the ability to program two very different systems.

"Once NVIDIA GPUs are integrated into Hawk, hybrid workflows combining HPC and AI will become much more efficient," said Dennis Hoppe, who leads artificial intelligence operations at HLRS. "Losses of time that occur because of data transfer and the need to run different parts of the workflows in separate stages will practically disappear. Users will be able to stay on the computing cores they are using, run an AI algorithm, and integrate the results immediately."

HLRS users will also be able to leverage the NVIDIA NGC™ catalog to access GPU-optimized software for deep learning, machine learning, and high-performance computing that accelerates development-to-deployment workflows. Furthermore, the combination of the new GPUs with the In-Networking Computing acceleration engines of the HDR NVIDIA Mellanox InfiniBand network enables leading performance for the most demanding scientific workloads. Hoppe also anticipates that in the future the new GPUs could be more easily used to run certain kinds of traditional simulation applications more quickly.

Several scientists in HLRS's user community have begun pursuing projects that combine simulation using high-performance computing and deep learning. The following are a few examples illustrating the potential impact of this approach.

Before a die is cast to press sheet metal into a side panel for the body of an automobile, engineers must comprehensively understand how the sheet metal will perform under physical loads during fabrication. Using only experimental methods to design forming dies and fine-tune press lines’ parameters would be far too expensive and time consuming, and so simulation using HPC has for many years helped to accelerate this development process. Dr. Celalettin Karadogan and colleagues in the University of Stuttgart's Institute for Metal Forming Technology seek to make such virtual design more realistic and accessible to producers everywhere. Using real-world testing data related to metal behavior, the researchers have begun using HPC to generate what will become up to 2 billion simulations of material performance. They will then use the data to train a neural network that learns to define and evaluate relevant behavior for any kind of metal. The resulting simplified model would provide a tool that any sheet metal user or producer could potentially run on a standard desktop computer to meet a specific client's needs.

Ludger Pähler in the laboratory of Dr. Nikolaus Adams at the Technical University Munich is conducting basic studies of the deflagration to detonation transition, an event in which combustion suddenly turns into a violent explosion. The phenomenon, which is relevant for understanding supernovae and confinement-based approaches to nuclear fusion, was also a factor in the August 2020 warehouse explosion that destroyed parts of Beirut, Lebanon. Pähler is combining statistics and machine learning in programs for inferring how different inputs into a simulation of the deflagration to detonation transition lead to the resulting outputs. The work, which relies on high-dimensional datasets and requires extreme-scale inference, has already produced hundreds of thousands of sample models of the transition. To make the investigation computationally manageable, however, he is using a method called reinforcement learning to identify meaningful regions in the complex reactions that should be sampled. This will make the inference routine more intelligent and computationally tractable, and would dramatically reduce the cost and computational burden of analyzing the entire reaction. Pähler anticipates that the new Hawk architecture should make it possible to efficiently retrain the reinforcement learning model, funneling feedback from new simulations back into the model in ways that enable it to understand and react to how well it is sampling.

Simulation is essential for modern fluid dynamics research in fields like aerodynamics and combustion. Typically, researchers divide an area or structure they are studying into a mesh and then calculate small-scale interactions within each “box.” In one approach called direct numerical simulation (DNS), supercomputers like Hawk enable researchers to model a fluid's movement without any assumptions or input data. However, DNS requires enormous computing power that is often not accessible to power companies or engineers in industries that would also benefit from this kind of information. Dr. Andrea Beck and colleagues in the University of Stuttgart’s Institute for Aerodynamics and Gas Dynamics are using Hawk to perform high-accuracy DNS simulations, and then using the resulting data to train neural networks to improve a less computationally demanding approach called large-eddy simulation. The results could lead to a more accessible means for performing sophisticated analyses of turbulence and flow.

— Christopher Williams