High-Performance Computing Center Stuttgart

One of the instruments aboard Envisat was the Michelson Interferometer for Passive Atmospheric Sounding (MIPAS), which measured the infrared radiation emitted by the Earth’s atmosphere. These measurements help scientists better understand the role greenhouse gases play in our atmosphere.

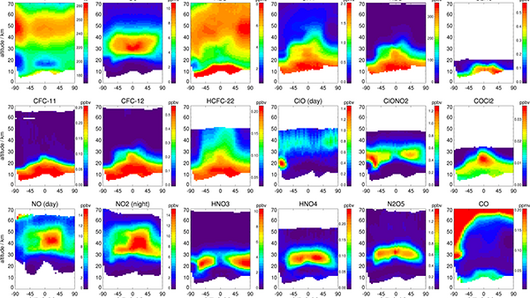

Since many gases found in the atmosphere exhibit characteristic “fingerprints” in these high-resolution spectra, each set of spectra, recorded at a given location, allows researchers to obtain the vertical profiles of more than 30 key gases.

During each of the satellite's 14 daily orbits, the instrument took measurements at up to 95 different locations. Each measurement consisted of 17 to 35 spectra corresponding to altitudes from 5 to 70 km, and sometimes reaching as high as 170 km.
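To give a sense of scale, the short Python sketch below turns these sampling figures into an upper bound on the number of spectra recorded per day. It assumes every orbit reaches the maximum of 95 locations, which the figures above give only as an upper limit, so real daily totals would be lower. The constant and function names are illustrative and do not come from the MIPAS processing software.

```python
# Figures quoted in the article.
ORBITS_PER_DAY = 14
MAX_LOCATIONS_PER_ORBIT = 95
SPECTRA_PER_LOCATION = (17, 35)  # fewest and most spectra per location


def max_spectra_per_day(spectra_per_location):
    """Upper bound on daily spectra, assuming every orbit hits 95 locations."""
    return ORBITS_PER_DAY * MAX_LOCATIONS_PER_ORBIT * spectra_per_location


low, high = (max_spectra_per_day(n) for n in SPECTRA_PER_LOCATION)
print(f"Upper bound: {low:,} to {high:,} spectra per day")
```

Under these assumptions the instrument would record at most a few tens of thousands of spectra per day, each of which has to be analyzed for dozens of gases.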

Taken together, a decade of compressed MIPAS spectral data fills roughly 10 terabytes. In order to analyze such a massive amount of data in a timely manner, researchers at the Karlsruhe Institute of Technology (KIT) and the Instituto de Astrofísica de Andalucía (IAA-CSIC) turned to the power of high-performance computing (HPC). The team partnered with the High-Performance Computing Center Stuttgart (HLRS) to securely store their large dataset and used the center's supercomputing resources to model and analyze MIPAS’s infrared spectra.

“Using HLRS's supercomputer we were able to assemble a complete dataset for our 10-year time series quickly and thoroughly,” said Dr. Michael Kiefer, researcher at KIT and principal investigator for the project. “We could attempt to do this work on cluster computers, but the processing would take years to get the result. With HPC we can quickly look at our results and extract a wider variety of chemical species from the measured spectra. This isn’t just a quantitative improvement, but also a qualitative one.”

In recent years, scientific computing has entered a new phase of development. For decades, computing advancements were rooted in the idea of Moore’s Law, Gordon Moore's prediction that the shrinking size of transistors would make it possible to double the number of transistors on a computer chip at regular intervals. Moore first proposed the idea in 1965 with a doubling every year, then revised the interval to two years in 1975. Either way, the result would be an exponential increase in computing power over the decades to come. While Moore was correct for several decades, the last 10 years brought this trend to an end.

As luck would have it, however, raw computing power was also no longer what many researchers needed most. Today, solving scientific challenges is often no longer limited by processing speed, but rather by the need to efficiently transfer, analyze, and store large datasets.

This holds true for the MIPAS researchers, who must process and analyze a decade of data tracking temperature and 36 different chemical species. The team’s work is further complicated by the intricate relationships among trace gases at higher altitudes: researchers must chart the interplay of temperature, radiation, and the concentrations of other chemicals, and how all these characteristics influence one another. As a result, the team has to perform computationally expensive non-local thermodynamic equilibrium (NLTE) calculations for these species. Water vapor alone required roughly one million core hours for these calculations, and the team had to model nine species using NLTE methods.
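A core hour is one processor core running for one hour, so the same workload finishes sooner when spread across more cores. The Python sketch below converts the one million core hours quoted for water vapor into idealized wall-clock time. The core counts are hypothetical examples, not the sizes of the team's local cluster or of the HLRS system, and the calculation assumes perfect parallel scaling, which real workloads rarely achieve.

```python
WATER_VAPOR_CORE_HOURS = 1_000_000  # figure quoted in the article


def wall_clock_days(core_hours, cores):
    """Idealized runtime in days, assuming perfect parallel scaling."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return core_hours / cores / 24


# Hypothetical machine sizes, chosen only for illustration.
for cores in (100, 1_000, 10_000):
    days = wall_clock_days(WATER_VAPOR_CORE_HOURS, cores)
    print(f"{cores:>6} cores: about {days:,.1f} days")
```

Under these assumptions, a job that would occupy 100 cores for more than a year fits into a few days on 10,000 cores.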

While these calculations could individually be performed on more modest computing resources, they would collectively take far too long without access to HPC resources. “Earlier in the project we were just working on a local cluster to run a month of data from the upper atmosphere, where this complex NLTE is required,” said Dr. Bernd Funke, a senior researcher at IAA and collaborator on the project. “Getting results for one month of data could take almost one month of computation. Now we can run these things in two or three nights. From a scientific point of view, this quick access to the data is extremely valuable.”

Both Kiefer and Funke indicated that HLRS computing resources—as well as the ability to store their data on HLRS’s fast and secure High-Performance Storage System—enabled the team to rapidly analyze its data.

As the researchers finish their analysis of the MIPAS dataset, they anticipate that the near future will see new mid-infrared space missions. Considering the massive datasets they expect these missions to produce, HPC centers like HLRS will continue to play a major role in hosting, processing, and analyzing the data.

Future missions such as the Earth Explorer candidate mission CAIRT, recently selected by ESA for pre-feasibility studies, will use imaging methods, which increase the number of measurements per orbit and add two dimensions to the data. This will not only give researchers an even more detailed view of atmospheric composition and processes, but also greatly increase the complexity and volume of the data analysis required. The researchers estimate that one of the projected instruments could result in up to a 1,000-fold increase in the number of data points gathered.

The team also indicated that HLRS was quick to embrace their relatively “non-traditional” need for supercomputing resources. The current pivot across the sciences toward ever more data-centric HPC applications underscores the need for HPC centers to provide a suite of tools for data storage and management.

The entire MIPAS dataset of chemical species in the atmosphere can be accessed at https://www.imk-asf.kit.edu/english/308.php

-Eric Gedenk

Funding for Hazel Hen was provided by the Baden-Württemberg Ministry for Science, Research, and the Arts and the German Federal Ministry of Education and Research through the Gauss Centre for Supercomputing (GCS).