High-Performance Computing Center Stuttgart

Uncertainty is not just a concept in high-performance computing, of course. Other disciplines, including economics, politics, philosophy, and science, all have long traditions of reckoning with it in different ways. A conference held at the High-Performance Computing Center Stuttgart (HLRS) on July 28–30, 2025 sought to draw on such multidisciplinary expertise, exploring how dialogue among scholars in the humanities, social sciences, and computational sciences could shed new light on the concept of uncertainty. The conference, SAS25, was the latest in the series “The Science and Art of Simulation,” an annual event that has taken place at HLRS since 2017.

Nico Formanek, who organized the event as head of the HLRS Department of Philosophy of Computational Sciences, explained that interest in uncertainty emerged from the HLRS research project Modeling for Policy and is closely related to the HPC center’s other activities. “Philosophy has a tradition of dealing with uncertainty, focusing on the question of the logical foundations of probability,” he said. “When thinking about simulation it also has practical relevance, as it is important to understand how decisions made in simulation pipelines lead to different outcomes, as well as what could be improved in these processes.”

For philosopher John Symons of the University of Kansas, artificial intelligence presents an epistemological problem for science. Traditional scientific methodologies have typically produced deterministic models, that is, models in which the same inputs always yield the same outputs. In a keynote talk, Symons explained why simulation, and particularly artificial intelligence, cannot eliminate uncertainty completely. Instead, he suggested that science must learn to embrace probabilistic approaches to reasoning and develop more reliable methods for validating and assessing the trustworthiness of AI-generated knowledge.

One practical application of probabilistic models is hydrological modeling, an approach used to identify communities’ vulnerabilities to flooding. The complexity of weather, geology, and urban development, however, means that predicting with 100% accuracy what will happen during a specific storm is impossible. In talks at the SAS25 conference, Anneli Guthke and Manuel Alvarez Chaves, members of the University of Stuttgart’s SimTech project, explained the challenges of understanding uncertainty in probabilistic hydrological models and discussed approaches to improving uncertainty quantification. Further development of such approaches could help policymakers and residents of flood-prone areas make more informed decisions about risk and how to manage it.

Several lectures at the conference raised cautions about whether eliminating uncertainty in simulation should be considered a desirable goal at all. In a keynote address, ethicist Marcus Düwell of the Technical University Darmstadt argued that uncertainty is a necessary element of the human condition. Becoming overly reliant on the certainty that simulation seems to offer, he warned, could threaten individuality, which he suggested could in turn impair the functioning of democratic societies.

For Quanrong Gong, a philosopher at the University of Cincinnati, uncertainty is also a necessary element in collaboration and joint action. Partners in such relationships depend on one another to contribute capabilities they do not have themselves. Gong argued that the extent of these capabilities and their compatibility are unclear at the start of a collaboration, introducing a type of uncertainty that can lead to unforeseen positive outcomes.

Ramon Alvarado of the University of Oregon also questioned the wisdom of completely eliminating uncertainty, which he pointed out could reduce serendipity, a factor in many revolutionary discoveries in the history of science. He anticipates that artificial intelligence, which today can efficiently deliver plausible answers to many questions, could lead to a loss of such chance occurrences. What will we miss as a result of our increasing reliance on AI, he asked, and how could serendipity be built into it in the future?

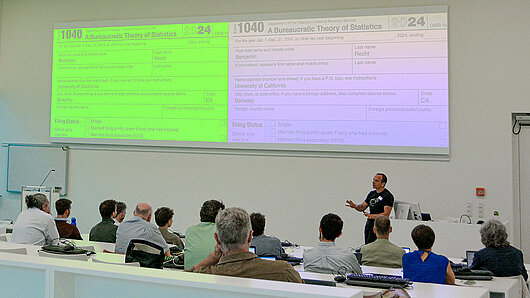

In a third keynote lecture, Ben Recht, a machine learning expert at the University of California, Berkeley, took a closer look at the relationship between statistical methods and how they are used in practice. He proposed that statistics gain meaning only through their integration into bureaucratic structures and processes. In randomized clinical trials in medicine, for example, he argued that “ex ante policies” establish statistical rules and procedures that guide data collection and ultimately shape how the data are analyzed and interpreted. Building on this premise, he suggested new directions for research in statistical methodology for policy.

Recht’s arguments are an example of the kinds of insights that can emerge when the computational sciences, social sciences, and humanities meet in HLRS’s Science and Art of Simulation conferences. For Formanek, the event speaks to the core of HLRS’s mission to increase the impact of supercomputing in the public sphere: “The SAS conferences help to ensure that simulations running on our computers are more understandable and more helpful in society.”

These short summaries are just a sampling of the diverse topics considered during the SAS25 conference on uncertainty. The full conference proceedings will be collected in an upcoming book to be published by Springer Verlag. Proceedings of past Science and Art of Simulation conferences are available on our HLRS Books page.

— Christopher Williams