High-Performance Computing Center Stuttgart

In a new project called TOPIO, Schwitalla is working together with HLRS scientists to test an approach that could improve the I/O of large-scale simulations. In the following interview, he describes the opportunities and challenges that the march toward exascale poses for HPC.

Because computing resources were limited, in the past it was often only possible to conduct high-resolution sub-seasonal simulations (simulations covering between 4 weeks and 2 months) using Limited Area Models (LAMs). That is to say, one could only simulate one section of the Earth at a time. This always led to a mathematical problem, because one needed boundary conditions borrowed from another, global model. Often, the physics of a LAM differed from that of the driving model. When, for example, clouds in the boundary layer are represented differently, this can cause unwanted effects. In longer simulations the boundary conditions can sometimes “shine through,” so that you are no longer looking at the model itself.

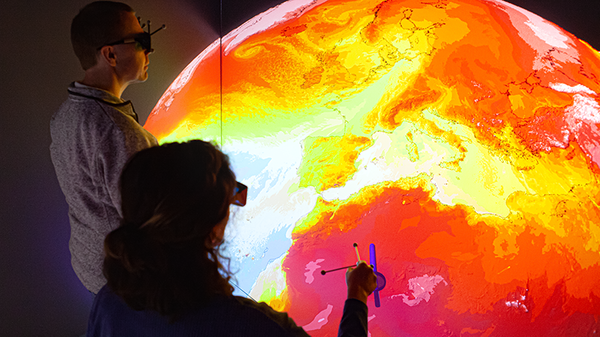

The advantage of a global model is that it has no boundary conditions. And what is interesting today is that one can compute a global simulation using a very small mesh size, or to put it another way, at a very high resolution. To do this, however, you need enormous computational resources.

Currently there are global climate simulations that run at a resolution of 25 to 50 km. This is good, but it isn’t sufficient if you want to look at the local level. In the Black Forest, for example, there are a lot of mountains and valleys, and a resolution of 25 km cannot capture this complex topography. You also see this in archipelagos such as the Philippines, Indonesia, or the Canary Islands, where a 25 km mesh simply can’t represent the smaller islands.

The hope is that higher-resolution models will enable more accurate predictions. You can represent atmospheric processes much more realistically, which can help, for example, in predicting tropical storms or sudden heavy rain events. Knowing what is going to happen in the next 30 to 60 days is also of great interest for agriculture, because farmers want to know the best time to seed or harvest. In one EU-funded project, researchers have conducted the first 40-day simulations and have shown that higher resolution has a significantly positive effect on the prediction of precipitation.

If you were to cover the entire surface of the Earth with MPAS grid cells spaced about 1.5 km apart, you would have 262 million cells. In the configuration I use, 75 vertical layers are also needed to simulate the atmosphere. This means that I have 262 million x 75 cells, or approximately 20 billion cells. To model weather realistically, in every one of those cells I need to know the temperature, the air pressure, the wind, and the relative humidity. In addition, you need to account for variables like cloud water, rain, ice, or snow. Every one of these variables has 20 billion data points that need to be saved. Even when using single precision (a format in which floating-point numbers are stored using 32 bits instead of 64 bits), each output time step produces a file of approximately a terabyte. When simulating 24 hours with one output file for every simulated hour, you would have 24 terabytes of data. For a 60-day simulation, that means about 1.3 petabytes.
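The data-volume arithmetic above can be reproduced with a short back-of-the-envelope script. This is a sketch, not MPAS tooling: the cell count, layer count, and 4-byte values come from the interview, while the number of three-dimensional output fields (`N_FIELDS = 12`) is an assumption chosen so that one output step comes to roughly a terabyte.

```python
# Back-of-the-envelope estimate of uncompressed MPAS output volume.
# CELLS_PER_LAYER, VERTICAL_LAYERS and BYTES_PER_VALUE come from the interview;
# N_FIELDS is an ASSUMPTION (the exact list of output variables is not given).

CELLS_PER_LAYER = 262_000_000  # horizontal cells at ~1.5 km spacing
VERTICAL_LAYERS = 75           # vertical levels in this configuration
BYTES_PER_VALUE = 4            # single precision: 32 bits per value
N_FIELDS = 12                  # hypothetical number of 3D output fields


def total_cells() -> int:
    """Number of 3D grid cells (horizontal cells x vertical layers)."""
    return CELLS_PER_LAYER * VERTICAL_LAYERS


def bytes_per_output_step() -> int:
    """Bytes written for one output time step, all fields, no compression."""
    return total_cells() * N_FIELDS * BYTES_PER_VALUE


def output_volume_tb(simulated_hours: int, outputs_per_hour: int = 1) -> float:
    """Total uncompressed output in decimal terabytes (1 TB = 10**12 bytes)."""
    steps = simulated_hours * outputs_per_hour
    return bytes_per_output_step() * steps / 1e12


if __name__ == "__main__":
    print(f"3D cells:        {total_cells():,}")
    print(f"Per output step: {bytes_per_output_step() / 1e12:.2f} TB")
    print(f"24 hours:        {output_volume_tb(24):.1f} TB")
    print(f"60 days:         {output_volume_tb(60 * 24) / 1000:.2f} PB")
```

With 12 fields, this gives about 19.65 billion cells, 0.94 TB per output step, 22.6 TB per simulated day, and about 1.36 PB for 60 days, which is consistent with the rounded figures quoted in the interview.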

Such huge amounts of data pose a challenge for file systems. In simulations using 10,000 or 100,000 compute cores, you run into a situation where, on the one hand, communication between MPI processes can’t keep up, and on the other, the file system can’t process the data fast enough because of limited memory bandwidth.

In TOPIO we want to investigate whether it is possible to accelerate I/O to the file system by using an autotuning approach to data compression. Algorithms for data compression already exist, but they have not yet been parallelized for HPC architectures. We want to determine whether such approaches could be optimized using MPI. Scientists in the EXCELLERAT project, which HLRS is leading, have developed a compression library called BigWhoop, and in TOPIO we will apply it to MPAS. The goal is to reduce data size by up to 70% without losing information.
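To make the idea of autotuning compression concrete, here is a minimal sketch in plain Python. It is not BigWhoop’s algorithm or API: as stand-ins it uses mantissa truncation (zeroing low-order bits of 32-bit floats) followed by `zlib`, and it searches a small, hypothetical grid of settings for the one with the smallest output whose maximum relative error stays within a user-chosen tolerance. The names `autotune`, `Setting`, and the candidate grids are illustrative assumptions.

```python
import zlib
from array import array
from typing import NamedTuple, Optional, Sequence

FLOAT32_MANTISSA_BITS = 23


class Setting(NamedTuple):
    compressed_bytes: int
    keep_bits: int   # mantissa bits kept (23 = lossless)
    zlib_level: int


def truncate_mantissa(values: array, keep_bits: int) -> array:
    """Zero the lowest (23 - keep_bits) mantissa bits of each float32 value."""
    bits = array("I")
    assert bits.itemsize == 4, "needs a 32-bit unsigned array type"
    bits.frombytes(values.tobytes())  # reinterpret the float32 bit patterns
    mask = (0xFFFFFFFF << (FLOAT32_MANTISSA_BITS - keep_bits)) & 0xFFFFFFFF
    for i in range(len(bits)):
        bits[i] &= mask  # sign and exponent bits are always kept
    out = array("f")
    out.frombytes(bits.tobytes())
    return out


def max_relative_error(original: array, approx: array) -> float:
    """Largest |a - b| / |a| over all nonzero original values."""
    return max(
        (abs(a - b) / abs(a) for a, b in zip(original, approx) if a != 0.0),
        default=0.0,
    )


def autotune(
    values: array,
    rel_tolerance: float,
    keep_bits_options: Sequence[int] = (23, 16, 12, 8),
    zlib_levels: Sequence[int] = (1, 6, 9),
) -> Optional[Setting]:
    """Try every (keep_bits, level) pair; return the smallest acceptable one."""
    best: Optional[Setting] = None
    for keep_bits in keep_bits_options:
        approx = truncate_mantissa(values, keep_bits)
        if max_relative_error(values, approx) > rel_tolerance:
            continue  # this precision loses too much information
        for level in zlib_levels:
            size = len(zlib.compress(approx.tobytes(), level))
            if best is None or size < best.compressed_bytes:
                best = Setting(size, keep_bits, level)
    return best
```

For example, `autotune(field, rel_tolerance=1e-4)` on a temperature-like `array("f", ...)` returns the setting with the fewest compressed bytes among those within the tolerance. Because `keep_bits=23` is lossless, a result always exists when it is among the options. A real autotuner would also weigh compression time and the error metric the scientists actually care about.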

This last point is very important for atmospheric modeling. What makes it particularly difficult is that certain variables, such as water vapor, cloud water, or cloud ice, take very small values. If I lose a digit of a number during compression, this can lead to differences in the results of a longer simulation, as well as in the resulting statistical analysis. The problem is also relevant when you compare simulations with satellite data. When information is lost through compression, specific features can, under certain conditions, simply go missing.
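Why small values are so sensitive can be shown with a toy comparison of two generic ways of bounding compression error. The sketch below is illustrative only, with hypothetical numbers: rounding to a fixed absolute step (here `1e-5`) keeps a water-vapor-like value of 0.012 kg/kg almost exactly, but rounds cloud-ice-like values of a few millionths of a kg/kg to zero, so that feature disappears. Keeping a fixed number of significant digits (a relative bound) avoids this. Neither function represents how BigWhoop or any specific compressor works.

```python
def quantize_absolute(x: float, step: float) -> float:
    """Round x to the nearest multiple of `step` (absolute error <= step / 2)."""
    return round(x / step) * step


def round_significant(x: float, digits: int) -> float:
    """Keep `digits` significant digits (a relative rather than absolute bound)."""
    return float(f"{x:.{digits}g}")


# Illustrative values only (hypothetical mixing ratios in kg/kg).
water_vapor = 0.012
cloud_ice = [2e-6, 3.14159e-6, 4e-6]

STEP = 1e-5  # hypothetical absolute error bound chosen with the large field in mind

print(quantize_absolute(water_vapor, STEP))             # close to 0.012
print([quantize_absolute(v, STEP) for v in cloud_ice])  # all zero: the ice vanishes
print([round_significant(v, 3) for v in cloud_ice])     # the small values survive
```

The design point is that an error bound which is harmless for one variable can erase another entirely, so the tolerance has to be chosen per variable and in terms of the precision that matters for it.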

Reducing the amount of data is also extremely important for users of the information, for example in agriculture or flood prediction centers. If the datasets are large and difficult to transfer, no one will use them. If the data are compressed, chances are higher that they will be used as part of decision-making processes. Another positive side effect is that compression can reduce the energy needed to store and archive the data.

In the future, scientists would like to be able to better estimate the uncertainties in their models. We see this need, for example, in cloud modeling. How clouds develop is very important for the climate, because clouds have a big influence on the Earth’s radiation balance. If a model’s resolution is coarse, it cannot represent small clouds. In some cases this can even mean that the radiation balance is not simulated correctly. If you use this information in a climate scenario to project what will happen over the next 100 years, there is a danger that the error becomes large.

Ensemble simulations can help to estimate such uncertainty. In multiphysics ensembles, for example, one might select a variety of different parameter settings for representing clouds or atmospheric processes in the boundary layer. Another option is the time-lagged ensemble, in which you start a second simulation, for example, 24 hours later and compare its results with those of the first. If the results converge, one can be more confident that the model is trustworthy.
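Both ensemble types come down to comparing several runs of the same quantity. The sketch below uses hypothetical function names and treats each run as a simple time series rather than a full 3D field. It computes the ensemble mean and spread across members (as one might for a multiphysics ensemble) and the RMS difference between two runs of a time-lagged ensemble over the valid times they share. The convergence threshold (`tolerance`) is a hypothetical user choice; the interview does not specify a criterion.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def ensemble_mean_and_spread(
    members: Sequence[Sequence[float]],
) -> Tuple[List[float], List[float]]:
    """Per-time-step mean and (population) standard deviation across members.

    Each member is a time series of equal length, e.g. one physics configuration.
    """
    per_step = list(zip(*members))
    return [mean(s) for s in per_step], [pstdev(s) for s in per_step]


def lagged_rms_difference(
    early: Sequence[float], late: Sequence[float], lag_steps: int
) -> float:
    """RMS difference of two runs over their common valid times.

    `late` was started `lag_steps` output steps after `early`, so
    early[lag_steps + i] and late[i] describe the same valid time.
    """
    pairs = list(zip(early[lag_steps:], late))
    if not pairs:
        raise ValueError("the two runs do not overlap in time")
    return math.sqrt(sum((a - b) ** 2 for a, b in pairs) / len(pairs))


def runs_agree(
    early: Sequence[float], late: Sequence[float], lag_steps: int, tolerance: float
) -> bool:
    """Hypothetical convergence test: RMS difference within `tolerance`."""
    return lagged_rms_difference(early, late, lag_steps) <= tolerance
```

With hourly output and a second run started 24 hours later, `lag_steps` would be 24. Note that every additional member multiplies both compute time and output volume, which is why ensembles are so expensive.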

Running ensemble models, however, is very computationally intensive. If I wanted to compute an ensemble of 10 members, I would need 10 times the compute time, and the resulting dataset would be 10 times as large as that of a single simulation. Computing power therefore remains a limiting factor. As a result, we are going to see a greater need for GPUs, because they are well suited to floating-point operations and can perform them much faster than CPUs. Many global models now use a combination of CPUs and GPUs, and in the coming years we can expect to see more mixed systems that contain both CPU and GPU components.

This transformation also has consequences for how simulation software is programmed. One can’t simply port existing code directly to GPUs; it must be adapted to run on them. There are currently several approaches for doing this, including OpenACC, CUDA, and OpenMP offloading. This means that even scientists who are experienced in HPC will need to adopt these new methods. It is great to see that HLRS has already begun addressing this need in its HPC training program.

— Interview by Christopher Williams