High-Performance Computing Center Stuttgart

The Gordon Bell Prize typically recognizes simulations run on the world’s largest computers, and in this case, the KAUST team turned to Fugaku, a supercomputer at Japan’s RIKEN Center for Computational Science that is currently the world’s second-fastest system, to complete the run cited in the list of award finalists. To gain access to this system, however, the researchers first needed to prove their method’s effectiveness and scalability. To do this, they tested it on other HPC systems with a variety of architectures, including the High-Performance Computing Center Stuttgart’s (HLRS’s) Hawk supercomputer, one of the first supercomputers worldwide to use AMD’s second-generation EPYC processors, which deliver an unprecedented cache capacity and bandwidth.

“For new algorithmic advances to be adopted by the scientific community, they need to be portable to a variety of high-performance computing architectures, and Hawk represents an important architecture for us to port to,” said Dr. David Keyes, director of the KAUST Extreme Computing Research Center (ECRC) and principal researcher on the KAUST team’s project. In addition to Hawk, the team tested its method on KAUST’s Shaheen-2 supercomputer and on Summit at Oak Ridge National Laboratory in the United States.

While pursuing computer science gold, the team wanted its efforts to benefit research aligned with KAUST’s core mission, which led it to focus on improving the performance of global climate models.

Since 2012, the world of high-performance computing (HPC) has increasingly turned to the rapid calculation capabilities of graphics processing units (GPUs) in pursuit of performance gains. On the Top500 list released in June 2022, most of the 20 fastest machines used GPUs or similar accelerators to achieve world-leading computational performance. Nevertheless, the growing complexity of ambitious, large-scale simulations such as global climate models still limits how much detail researchers can include.

With more powerful computers, climate scientists want to integrate as many factors as they can into their simulations, from cloud cover and historical meteorological data to sea ice change and particulate matter in the atmosphere. Rather than calculating these elements from first principles, an approach that would still be computationally expensive today, researchers include this information in the form of statistical models. The KAUST researchers focused specifically on a subset of statistical modelling called spatial statistics, which connects statistical data to specific geographic regions in a simulation. While this approach reduces the burden of solving many physics equations, even the world’s leading systems still buckle under the sheer volume of linear algebra (in this case, large, complex matrix computations) needed to fit these spatial statistical models.
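To give a sense of where that linear algebra comes from: a common way to fit a spatial statistical model is to maximize a Gaussian log-likelihood, whose cost is dominated by a Cholesky factorization of an n × n covariance matrix (roughly n³/3 operations and n² stored values for n locations). The sketch below is a generic, small-scale illustration under assumptions not stated in the article: a zero-mean Gaussian field, an exponential covariance kernel, and made-up locations and observations. It is not the KAUST team’s code, and the function names are hypothetical.

```python
import math

def cholesky(a):
    """Return lower-triangular L with a = L L^T (a must be symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        L[j][j] = math.sqrt(a[j][j] - sum(L[j][k] ** 2 for k in range(j)))
        for i in range(j + 1, n):
            L[i][j] = (a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))) / L[j][j]
    return L

def forward_solve(L, b):
    """Solve L y = b for a lower-triangular L."""
    y = []
    for i, row in enumerate(L):
        y.append((b[i] - sum(row[k] * y[k] for k in range(i))) / row[i])
    return y

def gaussian_loglik(cov, z):
    """log N(z | 0, cov) = -1/2 (n log 2pi + log det cov + z^T cov^-1 z)."""
    L = cholesky(cov)                      # the O(n^3) step that dominates at scale
    y = forward_solve(L, z)                # z^T cov^-1 z equals ||L^-1 z||^2
    logdet = 2.0 * sum(math.log(L[i][i]) for i in range(len(z)))
    return -0.5 * (len(z) * math.log(2 * math.pi) + logdet + sum(v * v for v in y))

# Hypothetical example: four locations on a line, exponential kernel with range 1.0
locations = [0.0, 0.5, 1.3, 2.0]
cov = [[math.exp(-abs(s - t) / 1.0) for t in locations] for s in locations]
z = [0.3, 0.1, -0.4, 0.2]  # made-up observations
print(gaussian_loglik(cov, z))
```

In a real climate application, n can reach millions of locations, which is why the matrix is split into tiles and the factorization is distributed across a supercomputer.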

Recently, GPUs have offered new options for dealing with such data-intensive applications, including artificial intelligence (AI) workflows. Beyond performing relatively simple calculations very quickly, GPUs can run large, complex simulations more efficiently than traditional CPUs when paired with innovative algorithms that reduce precision in the parts of a simulation where it matters less and reserve computationally expensive, high-precision calculations for the parts where they are most needed. Working with computational scientists, the KAUST team developed new ways of reducing the computational cost of these large simulations without sacrificing the accuracy of their results.

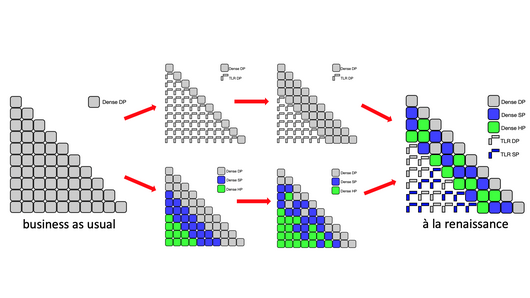

Traditional HPC applications perform “double-precision” calculations, in which each number occupies 64 bits of computer memory (FP64). Single-precision numbers take up just 32 bits (FP32); calculations in single precision are less exact than FP64 but can still provide important input to a simulation. Beyond these two formats, researchers are developing computational approaches that use different levels of precision depending on the level of detail needed for particular calculations within a simulation. When developed and implemented correctly, these so-called mixed-precision algorithms can offer the best of all worlds. They can even go beyond the two precisions typical in HPC, down to “half precision,” or FP16, which uses just 16 bits.
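The trade-off between the three formats can be made concrete with Python’s standard `struct` module, whose format codes `'e'`, `'f'`, and `'d'` pack a number as IEEE 754 half, single, and double precision. This minimal sketch stores the value 1/3 in each format, reads it back, and reports the storage size and the relative rounding error; the helper name `round_to` is illustrative only.

```python
import struct

# struct format codes: 'e' = half (FP16), 'f' = single (FP32), 'd' = double (FP64)
FORMATS = {"FP16": "e", "FP32": "f", "FP64": "d"}

def round_to(x, code):
    """Store x in the given IEEE format and read it back, exposing the rounding error."""
    return struct.unpack("<" + code, struct.pack("<" + code, x))[0]

exact = 1 / 3
for name, code in FORMATS.items():
    stored = round_to(exact, code)
    rel_err = abs(stored - exact) / exact
    size = struct.calcsize("<" + code)
    print(f"{name}: {size} bytes, stored {stored!r}, relative error {rel_err:.1e}")
```

Halving the storage each step down costs several orders of magnitude in accuracy, which is why mixed-precision methods must decide carefully where each format is acceptable.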

“With the advent of AI applications in 2012, there was a huge push to get GPUs to support lower precision calculations more effectively,” said Dr. Hatem Ltaief, principal research scientist at KAUST’s ECRC. “We realized that we needed to get on this train or let it pass us by. We started working with application scientists to show how their applications could tolerate some selective loss in accuracy and still run as if everything had been done in 64-bit precision. Identifying these places in complex workflows is not easy, and you have to have domain scientists involved to ensure that algorithm developers like ourselves are not just shooting in the dark.”

Keyes, Ltaief, Prof. Marc Genton, Dr. Sameh Abdulah, and their team at KAUST began working with researchers at the University of Tennessee’s Innovative Computing Laboratory with two goals: to incorporate spatial statistics into climate models efficiently, and to identify tiles in the matrix (the blocks into which the large matrix is divided) where they could raise or lower the precision of their calculations, switching among half, single, and double precision as a simulation proceeds. In essence, they look for places where lower-precision calculations still yield accurate results, which keeps researchers from “oversolving” their simulations.
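One way to picture tile-level precision selection is a norm-based rule: a tile whose entries are small relative to the whole matrix contributes little to the result, so the rounding error of a low-precision format matters less there. The sketch below assumes such a rule, choosing the lowest precision whose unit roundoff times the tile’s Frobenius norm stays within a per-tile share of a tolerance times the matrix norm. This criterion, the tolerance, the toy covariance matrix, and all function names are assumptions for illustration; the article does not specify the KAUST team’s actual criterion.

```python
import math

# Unit roundoff u = 2**-p for each IEEE format (p = significand bits incl. implicit bit)
UNIT_ROUNDOFF = {"fp16": 2.0 ** -11, "fp32": 2.0 ** -24, "fp64": 2.0 ** -53}

def frobenius(block):
    """Frobenius norm of a list-of-lists matrix."""
    return math.sqrt(sum(x * x for row in block for x in row))

def tile(matrix, bi, bj, b):
    """Extract tile (bi, bj) of size b x b (edge tiles may be smaller)."""
    return [row[bj * b:(bj + 1) * b] for row in matrix[bi * b:(bi + 1) * b]]

def choose_precisions(matrix, b, tol):
    """Hypothetical rule: give each tile the lowest precision whose estimated
    rounding error (||tile||_F * u) fits its share of tol * ||matrix||_F."""
    nt = math.ceil(len(matrix) / b)           # tiles per dimension
    budget = tol * frobenius(matrix) / nt     # per-tile error budget (assumed)
    plan = {}
    for bi in range(nt):
        for bj in range(nt):
            norm = frobenius(tile(matrix, bi, bj, b))
            plan[(bi, bj)] = next(
                (p for p in ("fp16", "fp32") if norm * UNIT_ROUNDOFF[p] <= budget),
                "fp64",  # fall back to double precision
            )
    return plan

# Toy spatial covariance: 8 points on a line, rapidly decaying kernel exp(-d / 0.1)
points = range(8)
cov = [[math.exp(-abs(x - y) / 0.1) for y in points] for x in points]
plan = choose_precisions(cov, b=2, tol=1e-8)
for bi in range(4):
    print(" ".join(plan[(bi, bj)] for bj in range(4)))
```

With this toy matrix, the diagonal tiles stay in FP64, their immediate neighbors drop to FP32, and distant tiles, whose correlations are nearly zero, drop to FP16, mirroring the pattern of strong near-field and weak far-field interactions typical of spatial covariance matrices.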

This method relies on the PaRSEC dynamic runtime system to orchestrate the scheduling of computational tasks and to convert data between precisions on demand. This enables researchers to lower the computational burden on the parts of a simulation that are less significant or less relevant for the result in question. “The key to our recent developments is that we do not have to anticipate these application-dependent mathematical behaviors in advance. We can detect them on-the-fly and adapt our savings to what we find in interacting tiles in simulations,” Keyes said.
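The idea of on-demand precision conversion inside a task-based runtime can be illustrated with a toy model. In this sketch, tasks run in dependency order (using the standard library’s `graphlib.TopologicalSorter`, Python 3.9+), each task declares the precision it wants, and a tile is converted only when a task needs it in a precision other than the one it is stored in, with each converted copy cached for later tasks. This is a hypothetical illustration, not PaRSEC’s API or scheduling logic; the task and tile names, the `run` function, and the summation “kernel” are all placeholders.

```python
import struct
from graphlib import TopologicalSorter

# struct format codes for IEEE half, single and double precision
FORMAT = {"fp16": "e", "fp32": "f", "fp64": "d"}

def round_to(values, precision):
    """Round a list of floats to the given precision via struct pack/unpack."""
    fmt = f"<{len(values)}{FORMAT[precision]}"
    return list(struct.unpack(fmt, struct.pack(fmt, *values)))

def run(tasks, tiles):
    """Run tasks in dependency order, converting tiles only on demand
    and caching each converted copy (a toy model, not PaRSEC)."""
    graph = {name: spec["after"] for name, spec in tasks.items()}
    cache, conversions, results = {}, 0, {}
    for name in TopologicalSorter(graph).static_order():
        spec = tasks[name]
        inputs = []
        for tile_name in spec["reads"]:
            stored_precision, values = tiles[tile_name]
            if spec["precision"] == stored_precision:
                inputs.extend(values)             # no conversion needed
                continue
            key = (tile_name, spec["precision"])
            if key not in cache:                  # convert once, on first use
                cache[key] = round_to(values, spec["precision"])
                conversions += 1
            inputs.extend(cache[key])
        results[name] = sum(inputs)               # stand-in for a real kernel
    return results, conversions

tiles = {"A": ("fp64", [0.1, 0.2]), "B": ("fp64", [1.0, 2.0])}
tasks = {
    "t1": {"reads": ["A"], "precision": "fp16", "after": []},
    "t2": {"reads": ["A", "B"], "precision": "fp16", "after": ["t1"]},
    "t3": {"reads": ["B"], "precision": "fp64", "after": ["t2"]},
}
results, conversions = run(tasks, tiles)
print(results, conversions)
```

Here tile A is converted to FP16 once and reused by two tasks, while the FP64 task reads tile B directly, so only two conversions occur for four tile reads.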

Through this work, the team achieved a 12-fold performance improvement over traditional state-of-the-art dense matrix calculations. “By looking at the problem as a whole, tackling the entire matrix, and looking for opportunities in the data sparsity structure of the matrix where we could approximate and use mixed precision, we provide the solution for the community and beyond,” Ltaief said.

The KAUST team’s algorithmic innovations will benefit computer scientists and HPC centers alike. New, large supercomputers are built each year, and their collective demand for electricity continues to grow. As the world confronts the twin challenges of reducing carbon emissions and coping with a crisis in energy supply, HPC centers continue to look for new ways to lower their energy footprints. While some of those efforts focus on improving hardware efficiency, for example by reusing waste heat generated by the machines or cooling them with warmer water, there is a new emphasis on ensuring that simulations run as efficiently as possible and keep data movement to a minimum.

“Data movement is really expensive in terms of energy consumption, and by compressing and reducing the overall precision of a simulation, we also reduce the volume of data motion occurring throughout the simulation,” Ltaief said. “We move from a computationally intensive, large dataset simulation to a memory-bound simulation operating on a dataset with a lower memory footprint and therefore, there is a lot of gain in terms of energy consumption.”
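The link between lower precision and less data movement is simple arithmetic: every value stored in FP32 instead of FP64 halves the bytes that must be moved, and FP16 quarters them. The sketch below counts the bytes needed to hold or move a tiled matrix under a given mix of tile precisions. The 10 × 10 tile grid, the 1,000 × 1,000 tile size, and the 10/30/60 split across FP64, FP32, and FP16 are purely hypothetical numbers chosen for illustration, not results from the KAUST runs.

```python
# Bytes per value for each IEEE format
BYTES = {"fp64": 8, "fp32": 4, "fp16": 2}

def matrix_bytes(tile_size, tile_counts):
    """Total bytes needed to hold (or move) a tiled matrix, given how many
    tiles are stored in each precision."""
    values_per_tile = tile_size * tile_size
    return sum(values_per_tile * BYTES[p] * count for p, count in tile_counts.items())

# Hypothetical plan for a 10 x 10 grid of 1,000 x 1,000 tiles
mixed = {"fp64": 10, "fp32": 30, "fp16": 60}
all_double = {"fp64": 100}
ratio = matrix_bytes(1000, all_double) / matrix_bytes(1000, mixed)
print(f"{ratio:.2f}x less data to move")  # prints "2.50x less data to move"
```

Because memory traffic and interconnect traffic scale with these byte counts, a reduction of this kind translates directly into less energy spent moving data, in line with Ltaief’s point.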

HLRS has long sought ways to reduce and reuse the energy consumed by its supercomputers, so when Ltaief reached out to HLRS Director Prof. Michael Resch about the KAUST project, the team was able to test its method on Hawk’s architecture while the machine was still in acceptance testing.

“As an international research center we are open to collaboration with world leading scientists, we are happy to contribute to the progress in our field of HPC,” said HLRS Director Prof. Michael Resch. “Working with the team of KAUST was a pleasure and an honour.”

Keyes pointed out that the team’s approach also shows promise in other scientific domains, and that this versatility is one of the most rewarding aspects of being a computer scientist. “Do linear algebra; see the world,” he said. The team has extended its approach to signal decoding in wireless telecommunications, adaptive optics in ground-based telescopes, subsurface imaging, and genotype-to-phenotype associations, and plans to extend it to materials science in the future.


-Eric Gedenk

Funding for Hawk was provided by the Baden-Württemberg Ministry for Science, Research, and the Arts and the German Federal Ministry of Education and Research through the Gauss Centre for Supercomputing (GCS).