High-Performance Computing Center Stuttgart

Fluid dynamics research is well-suited to modeling and simulation. Many HPC simulations begin with a computational grid or mesh that allows researchers to break a large, complex problem into small equations that can be calculated in parallel, then quickly pieced back together. As machines have gotten more powerful, researchers have been able to make these computational meshes increasingly finer.

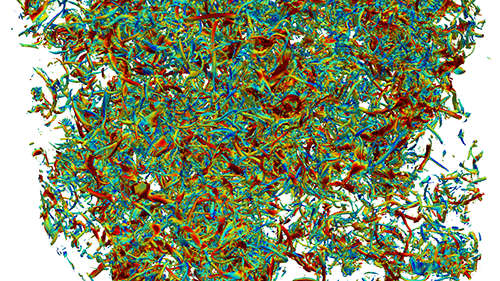

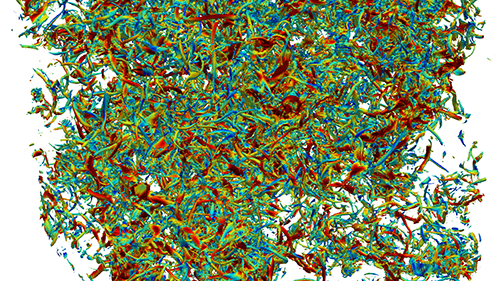

Now that computers—such as the Hawk system at the High-Performance Computing Center Stuttgart (HLRS)—are capable of quadrillions of calculations per second, researchers have gained the ability to create direct numerical simulations (DNS) that can model a fluid’s movements “from scratch” without the need for simplifying models or assumptions. When computing turbulence in fluid flows, DNS allows researchers to accurately simulate the tiniest swirling motions, or eddies, that influence large-scale movements in a fluid.

Doing this work, however, requires regular access to large-scale supercomputers. When researchers from industry, for example, want to get a rougher view of turbulent motions, they often run series of large-eddy simulations (LES), which calculate only the behavior of the larger swirling motions happening in a fluid. This approach also requires making assumptions based on empirical data or other models about the behavior of the smallest eddies. While this approach requires less computational power, researchers know that even the smallest eddies have a large impact on how the whole system behaves.

Recently, researchers led by Dr. Andrea Beck at the University of Stuttgart’s Institute for Aerodynamics and Gas Dynamics (IAG) have been exploring how machine learning could make the power offered by direct numerical simulations accessible to researchers with more modest computational resources. A long-time user of HLRS’s HPC resources for traditional modelling and simulation work, she has turned to using Hawk to generate data that is then used to help train neural networks to improve LES models. Her experiments have already begun showing potential to accelerate fluid dynamics research.

The difference between modelling turbulence using LES methods and DNS methods is similar to photographs. One can think of DNS as a high-resolution photograph, whereas LES would be a rougher, more pixelated version of that same image. However, unlike a photograph, researchers do not always have access to the high-resolution version of a given system from which to develop an accurate representation in lower resolution. To fill in this gap in fluid modeling, researchers have to look for “closure terms” to fill in the gaps between DNS and LES calculations.

“To use a photograph analogy, the closure term is the expression for what is missing between the coarse grained image and the full image,” Beck said. “It is a term you are trying to replace, in a sense. A closure tells you how this information from the full image influences the coarse one.” Just like a processed image, though, there are many ways in which LES turbulent models can diverge from a DNS, and selecting the correct closure term is essential for the accuracy of LES.

The team started training an artificial neural network, a machine learning algorithm that functions similarly to how the human brain processes and synthesizes new information. These methods involve training algorithms with a collection of large datasets, often generated by HPC systems, to forge connections similar to those formed in the human brain during language acquisition or object recognition during our early years of life. These types of algorithms are training in a “supervised” environment, meaning that researchers feed in large amounts of data where they already have the solution, ensuring that the algorithm is always given the correct answer or classification for a given set of data.

“For supervised learning, it is like giving the algorithm 1,000 pictures of cats and 1,000 pictures of dogs,” Beck said. “Eventually, when the algorithm has seen enough examples of each, it can see a new picture of a cat or a dog and be able to tell the difference.”

For its work, the team did a series of DNS calculations on HLRS HPC resources. They did roughly 40 runs on the Hawk and Hazel Hen supercomputers at HLRS., using about 20,000 cores per run, then used this data to help train its neural network. Because this data could be useful for other researchers, the team is making the dataset publically available in 2021. The team compared two different training approaches in its work, and found that one approach achieved 99% accuracy in correctly pairing the correct closure term with a given LES filter function.

With such promising results, the team feels confident that these “data-driven” modeling approaches can be further refined to help bring the accuracy of HPC-driven DNS results to more modest simulation approaches. The team’s work serves as an example of an emerging trend in science and engineering—researchers are increasingly looking for ways to merge and complement traditional modeling and simulation done in HPC with artificial intelligence applications. With years of experience adapting and incorporating new and novel technologies, HPC centers like HLRS are natural choices for this type of convergent research to take place.

Because of an increasing demand for access to graphic processing units (GPUs)—accelerators that are well-suited for machine learning tasks—HLRS recently worked with its partners at Hewlett-Packard Enterprise to add 192 NVIDIA GPUs to Hawk, previously a central processing unit (CPU)-only system. The addition will benefit users who need to generate large datasets to train neural networks, eliminating delays in their researcher that would otherwise come from moving data between different systems.

"This is a great step forward in helping us to augment traditional HPC codes with new, data-driven methods and fills a definite gap,” Beck said. “It will not only help speed up our development and research processes, but provide us with the opportunity to deploy them at scale on Hawk.”

-Eric Gedenk