High-Performance Computing Center Stuttgart

In response to the recent growth in AI investments in the United States and China, Europe has set out to develop its infrastructure and know-how for AI, a step that will be important for bolstering its research capabilities, industrial productivity, and global economic competitiveness. Growing awareness of AI has also led increasing numbers of scientists and engineers to begin exploring how it could enhance their research and technology development. Meanwhile, AI is becoming a key component in everyday technologies for fields as diverse as agriculture, finance, manufacturing, and the operation of self-driving vehicles, among many others.

Recognizing the growing demand from its community of academic and industrial HPC users, HLRS took steps in 2019 to expand its capabilities and resources for artificial intelligence, including procuring a new supercomputing system that is optimized for AI applications and initiating projects that are testing practical applications of AI. At the same time, philosophers and social scientists at the supercomputing center have been working to better understand and address questions related to the trustworthiness of AI-based decision-making.

HLRS is still early in its expansion into AI, but already it has been laying the foundation for advances at the convergence of HPC, high-performance data analytics, and artificial intelligence approaches such as deep learning. Focusing on real-world scenarios where they can have an impact, HLRS is exploring how such new methods and technologies can be combined to best support the center's users and open up new ways to address global challenges.

In the hype surrounding the recent AI "gold rush," some have speculated that artificial intelligence applications running on modestly sized computers could soon replace the more established field of simulation using supercomputers. Research methods being explored at HLRS, however, suggest that for the foreseeable future the two approaches will deliver the greatest benefit by being used in a complementary way.

Explained simply, artificial intelligence is a type of automated pattern recognition. Using deep neural networks — a computer science method modeled on how neurons in the human brain extract meaning and make decisions based on sensory input — applications for deep learning identify and compare differences in large collections of training data, revealing characteristics in the data that carry significance. In automated driving, for example, this might mean image-processing algorithms that distinguish pedestrians in the car's field of vision. Once these patterns have been clearly defined, AI systems use them as a model for decision-making — for example, how to avoid collisions during driving.

Developing a training model that an AI algorithm can use to "learn," however, requires the generation and processing of an enormous amount of data — a task that is ideally suited to high-performance computers. Simulating a car's field of vision as it moves through a city, for example, means simultaneously representing millions of features, and not just once but continuously over split-second time steps. Developing such a model is impossible without high-performance computers.

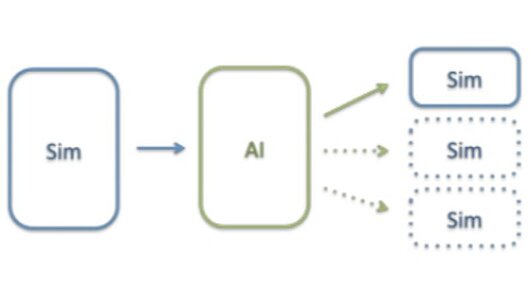

After an AI application combs through large datasets to develop a predictive model, it is then possible to convert it into a simplified AI tool that does not require the entire dataset, but only an understanding of the key parameters within it. In autonomous driving, for example, it is impossible for a car to carry a supercomputer onboard or rely on cloud technologies to communicate with a remote supercomputer fast enough to navigate rapidly changing road conditions. Instead, the model derived using HPC is converted into a low-power AI tool whose job is merely to observe and react based on key features in the data.

Such an approach is not only relevant for autonomous driving, but also for many kinds of AI applications. In agriculture, for example, AI connected to new kinds of sensors could help farmers optimize the timing of their planting and harvests. In engineering, inexpensive digital tools could monitor bridges on highways to alert maintenance staff when repairs are necessary. For any of these applications, however, researchers will need both supercomputing and deep learning to develop the models on which they are based.

While HPC is necessary to develop AI applications, artificial intelligence also holds promise to accelerate research based in traditional simulation methods. As HLRS Managing Director Dr. Bastian Koller explains, "HPC systems will continue to grow, which is good because it means we can tackle bigger problems. The troubling issue, though, is that the amount of data these larger systems produce is becoming unmanageable and it's becoming harder and harder to know what data is of value. We think that AI could be a natural fit to solve this problem."

In the field of computational fluid dynamics, for example (an area in which many users of HLRS's computing resources specialize), scientists have developed high-precision computational models of complex physical phenomena like turbulence in air and water flow. As supercomputers have grown in speed and power, they have made it possible to mathematically represent those properties at extremely high resolution. Producing these precise models, however, is computationally expensive, making it impractical for certain design optimization problems where it would be desirable to quickly compare many different simulations to find the best solution.

The arrival of AI offers a different approach. As more data has become available, scientists have begun exploring synthetic approaches using deep neural networks. Instead of trying to meticulously model an entire system based on physical principles, researchers have begun using deep learning to develop surrogate models. Here, they use a bottom-up, data-driven approach to create models that reproduce the relationship between the input and output data on which the model is based. Such surrogate models closely mimic the behavior of a traditional simulation model, and even if they do not have the same precision or degree of explainability as traditional simulations, they can approximate those results much more quickly. For some problems, this offers huge advantages.
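The surrogate idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: `expensive_simulation` is a hypothetical stand-in for a costly solver, and a simple piecewise-linear interpolant takes the place of the deep neural network a real project would train. The point is only the workflow: run the costly model at a limited number of inputs, fit a cheap model to the resulting input/output pairs, then query the cheap model.

```python
import bisect
import math

def expensive_simulation(x: float, steps: int = 2000) -> float:
    """Hypothetical costly solver: midpoint-rule integral of sin(t)*exp(-t/4) on [0, x]."""
    h = x / steps
    return h * sum(
        math.sin((i + 0.5) * h) * math.exp(-(i + 0.5) * h / 4) for i in range(steps)
    )

def build_surrogate(xs, ys):
    """Return a piecewise-linear interpolant through the sorted training pairs."""
    def surrogate(x: float) -> float:
        # Find the training interval containing x, clamping at both ends.
        i = min(max(bisect.bisect_right(xs, x), 1), len(xs) - 1)
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return surrogate

# "Training data": 61 runs of the expensive model on a coarse grid from 0 to 6.
train_x = [i * 0.1 for i in range(61)]
train_y = [expensive_simulation(x) for x in train_x]
surrogate = build_surrogate(train_x, train_y)

# Query the cheap model at an input that was never simulated.
query = 2.345
approx = surrogate(query)
exact = expensive_simulation(query)
print(f"surrogate={approx:.4f}  simulation={exact:.4f}  error={abs(approx - exact):.1e}")
```

Each surrogate query costs a lookup and a few arithmetic operations rather than a full run of the solver, which is the trade-off described above: somewhat less precision in exchange for much faster answers.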

Koller also suggests that this data-driven approach could lead to novel insights. "One of the great opportunities for using AI to support traditional applications of high-performance computing is for identifying what I like to call the unknown unknowns," he explains. "There are sometimes dependencies in large data sets that we as humans don't see because we aren't looking for them. A machine methodically comparing data can often identify parameters within systems that have important effects that we would have missed, and that turn out to offer new opportunities we never would have thought to explore."
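As a rough illustration of what "methodically comparing data" can mean, the following Python sketch ranks the input parameters of a set of runs by how strongly each correlates with the output. The parameter names, the synthetic response, and the use of Pearson correlation are all assumptions chosen for this example; they are not drawn from any HLRS project, and real analyses would typically use methods that also capture nonlinear dependencies.

```python
import math
import random

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical parameter names for 500 synthetic "simulation runs".
names = ["inlet_velocity", "viscosity", "wall_roughness", "mesh_seed"]
runs = [[rng.uniform(0.0, 1.0) for _ in names] for _ in range(500)]

# Assumed response: strong dependence on viscosity, weaker dependence on
# inlet_velocity, none on the remaining parameters, plus a little noise.
outputs = [3.0 * r[1] + 1.0 * r[0] + rng.gauss(0.0, 0.1) for r in runs]

# Rank parameters by the absolute correlation of each column with the output.
ranking = sorted(
    ((abs(pearson([r[j] for r in runs], outputs)), name)
     for j, name in enumerate(names)),
    reverse=True,
)
for score, name in ranking:
    print(f"{name:15s} |r| = {score:.2f}")
```

In this toy case the ranking simply recovers the dependencies that were built into the data. With real simulation output, the same kind of systematic screening is one way to surface influential parameters that nobody thought to examine.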

This perspective suggests that in the coming years AI and HPC will converge, with hybrid approaches that combine both disciplines offering new opportunities for research and technology development. HPC will produce the large datasets needed to develop robust models, deep learning will help researchers to analyze that data more efficiently, and HPC will use the results to create more robust models. Using these two approaches in an iterative way could thus help researchers make sense of complex systems more quickly.

Realizing this goal, however, presents technical challenges, particularly because these very different computational disciplines require different types of computer hardware and software.

Supercomputers optimized for simulation have grown to consist of many thousands of central processing units (CPUs), a type of computer processor that is good at quickly breaking complex calculations into parts, performing those smaller calculations rapidly in a parallel manner across many CPUs, and then recombining them to produce a result. HLRS's supercomputer — as of 2020 a 26 petaflop system called Hawk — is based on this model because it provides the best infrastructure for solving the most common simulation problems facing the center's users.
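The split, compute, and recombine pattern described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how simulation codes on a system like Hawk are written: production codes distribute work across many nodes with dedicated parallel programming tools, and the function names here are hypothetical. Note also that Python threads do not speed up pure-Python arithmetic; the sketch shows only the structure of the decomposition.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum(bounds):
    """Compute one piece of the problem: the sum of squares over [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))

def parallel_sum_of_squares(n, workers=4):
    """Split range(n) into chunks, process the chunks concurrently, and recombine."""
    step = max(1, -(-n // workers))  # ceiling division, at least 1
    chunks = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker handles one chunk; the partial results are then added up.
        return sum(pool.map(partial_sum, chunks))

total = parallel_sum_of_squares(1_000_000)
print(total)
```

The result is identical to what a single loop over the whole range would produce; only the way the work is divided changes.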

Artificial intelligence applications, on the other hand, have taken off due to the arrival of another type of processor called a graphics processing unit (GPU). Originally designed for video gaming, these processors work fastest for iterative programs in which the same operation is performed repeatedly with slight variations, such as in a deep learning algorithm using neural networks.

In addition to different hardware, HPC and AI rely on different programming languages and software frameworks. Whereas simulation on large HPC systems often uses Fortran or C++, for example, artificial intelligence applications are more commonly developed in Python, often using frameworks such as TensorFlow. Developing workflows that can easily communicate between different languages will thus also be important.

In 2016, as interest grew in the possibilities offered by big data, HLRS began exploring opportunities to better integrate HPC and high-performance data analytics. Working together with supercomputer manufacturer Cray Inc. in a project called CATALYST, HLRS has been testing a combined hardware and software data analytics system called Urika-GX for its ability to support engineering applications. The researchers have already investigated the system's ability to integrate with Hazel Hen, HLRS's flagship supercomputer at the time.

By 2019, demand had been growing among HLRS's users not just for conventional data mining and machine learning, but also for deep learning using GPUs. In response, the center procured a new supercomputing platform, the Cray CS-Storm. A GPU-based system that comes with Cray's AI programming suite installed, the new system now makes it possible for HLRS to support research involving both HPC and AI under one roof.

Currently, HLRS has separate, dedicated systems for HPC and deep learning, each with its own architecture, programming frameworks, and job scheduler. In the future, Hoppe says, "the goal is to develop an integrated, holistic system for resource management and workflow execution that connects everything together in ways that make it possible to run hybrid HPC/AI workflows on a single platform."

At the same time that HLRS has been developing its technical infrastructure for AI, philosophers and social scientists in its Department of Philosophy of Computational Sciences have begun addressing a very different set of questions facing the field: namely, when is it appropriate to rely on machine learning systems and how can we ensure that decisions produced by AI reflect our values?

Deep learning algorithms that use neural networks to develop surrogate models, for example, function like "black boxes," making it extremely difficult to reconstruct how they arrive at their findings. Although the results are often useful in practice, this presents an epistemological problem for philosophers, forcing us to ask what we can say we actually know when presented with such results. As AI becomes more pervasive, playing roles in fields such as loan risk evaluation or prison sentencing that have direct impacts on people's lives, this limitation is disconcerting, particularly for nonscientists who are affected by AI but do not have the context to understand how it works.

During an HLRS conference in November 2019, a multidisciplinary gathering of researchers explored such questions. Meanwhile, because existing ethical guidelines have not had widespread impact, members of the HLRS philosophy department joined with VDE, the Bertelsmann Foundation, the Institute for Technology Assessment and Systems Analysis at the Karlsruhe Institute of Technology, and the International Center for Ethics in the Sciences and Humanities at Tübingen University to develop a more systematic framework for putting AI ethics principles into practice. As in standardization frameworks for other fields, this AI Ethics Impact Group is developing an enforceable set of orienting ethical guidelines for scientists, developers, consumers, and others affected by AI.

As Koller explains, HLRS's engagement with AI will continue to evolve with the field. "With the CATALYST project our goal was to produce success stories and to understand for ourselves what we need to do high-performance data analytics and deep learning. We learned a lot about the challenges and traps, and now we have gone a step further. We are now moving forward and identifying gaps that could be addressed by bringing in AI solutions. It's a process of learning by doing."

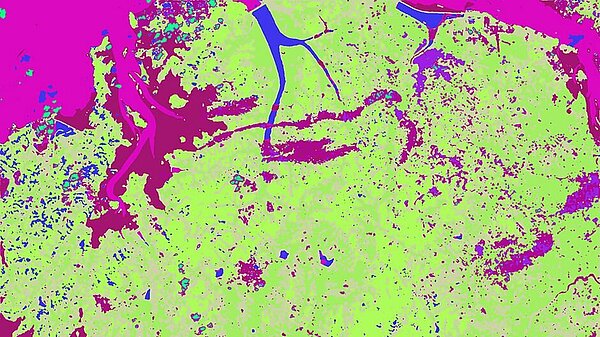

Although HLRS is still at an early stage in applying artificial intelligence methods, projects such as HiDALGO have begun providing a framework for exploring how AI could help scientists to identify the most meaningful parameters within large data sets. By focusing on pilot studies related to migration prediction, air pollution forecasting, and the tracking of misinformation over social media, researchers are working not only on the theoretical dimensions of this computer science challenge, but also directly on complex global challenges where high-performance computing and AI could have an impact.

At the same time, HLRS looks forward to making its new AI computing platform available for other researchers interested in exploring how these new approaches could offer new opportunities in their own fields.

"For many people AI is a Swiss Army Knife that will solve every problem, but it's not like that," Koller explains. "The interesting thing now is for people to find out what kind of problems can be solved using AI and how HPC can support these advances. We want to focus on real challenges from real life to show how you can best apply these new kinds of synergies."

—Christopher Williams